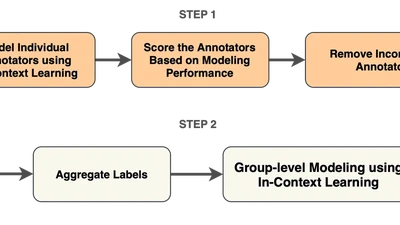

ARTICLE: Annotator Reliability Through In-Context Learning

Using LLMs to identify high-quality human annotators by checking if their labels are consistent with AI predictions—helping build better training data while preserving diverse …

Sujan Dutta

1 min read

ProRefine: Inference-Time Prompt Refinement with Textual Feedback

ProRefine automatically improves AI prompts during inference by having one AI agent give feedback to refine another agent's prompts—boosting accuracy by 3-37% and helping smaller …

Deepak Pandita

1 min read

Rater Cohesion and Quality from a Vicarious Perspective

Asking people to predict how others with different political views would label content reveals hidden biases and improves data quality for content moderation AI.

Deepak Pandita

1 min read